Hello,

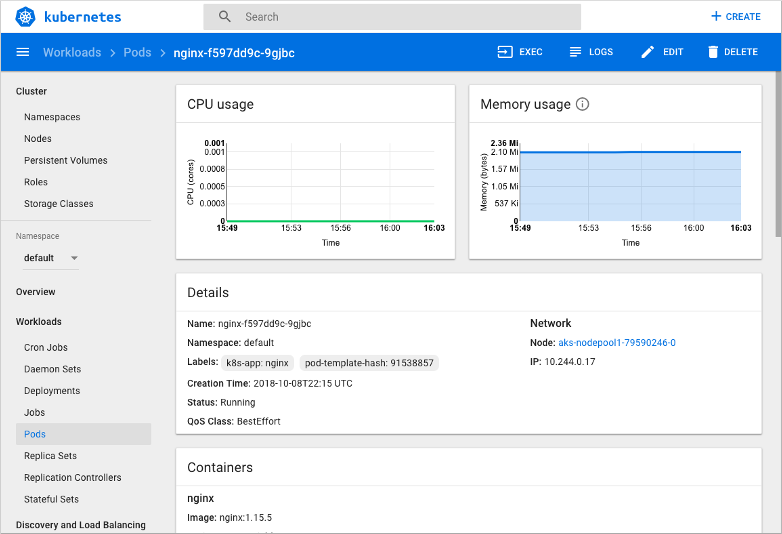

In this article, we will look at how to "disable/remove" the Kubernetes dashboard on your AKS cluster.

To do so, run the following command, replacing Nom_RG with the name of your resource group and Nom_AKS_Cluster with the name of your cluster:

az aks disable-addons -a kube-dashboard --resource-group Nom_RG --name Nom_AKS_Cluster
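
If you would rather not edit the command by hand each time, here is a small sketch that wraps it in a script. The variable names (RESOURCE_GROUP, CLUSTER_NAME, DRY_RUN) and the `run` helper are my own assumptions for illustration and are not part of the Azure CLI. By default the script only prints the command, so you can check it before touching the cluster.

```shell
#!/bin/sh
set -eu

# Placeholder values taken from the article; override them through the environment.
RESOURCE_GROUP="${RESOURCE_GROUP:-Nom_RG}"
CLUSTER_NAME="${CLUSTER_NAME:-Nom_AKS_Cluster}"

# Hypothetical helper: prints the command when DRY_RUN=1 (the default in this sketch),
# and executes it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

# --addons is the long form of the -a flag used above.
run az aks disable-addons --addons kube-dashboard \
  --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME"
```

To apply the change for real, set DRY_RUN=0 along with your own values, for example `DRY_RUN=0 RESOURCE_GROUP=my-rg CLUSTER_NAME=my-cluster sh disable-dashboard.sh` (the script file name is only an example).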

Have a nice day,

Maxime.