This year’s KubeCon in Atlanta brought together some of the most vibrant and forward-looking voices in the cloud-native ecosystem. After an insightful and energetic Cloud Native Rejekts, where the community pushed boundaries and shared unfiltered technical expertise, it was time for KubeCon: the global stage where these ideas scale, mature, and inspire real-world change.

KubeCon + CloudNativeCon North America 2025 also marked a milestone in my personal journey. It was my first time attending as a CNCF Ambassador, and I had the privilege of contributing as a speaker not once, but twice. It was a meaningful and transformative experience, and I’m excited to share what made this edition truly exceptional. With thousands of attendees from across the globe, dozens of tracks spanning everything from platform engineering and security to storage and edge compute, and the usual surge of side conversations and hallway meetups, the energy was palpable.

I also had the opportunity to join the Platform Engineering Coffee Meetup for the first time: a valuable learning experience and a surprisingly engaging discussion to kick off the day at 7 AM (ouch!).

As a contributor to the Kubernetes security ecosystem and an active member of the cloud-native community, I arrived wearing two hats: attendee and speaker (twice). This event felt like a clear reflection of how fast our ecosystem is evolving, and of how cloud-native security is reshaping itself alongside platform engineering, AI, and runtime detection.

Preparing and delivering these talks was an incredible learning experience. The KubeCon audience is uniquely engaged, filled with practitioners who ask thoughtful questions and share their own experiences. The conversations that continued in the hallway track after my sessions were just as valuable as the presentations themselves.

Several themes dominated the conversations at KubeCon Atlanta 2025:

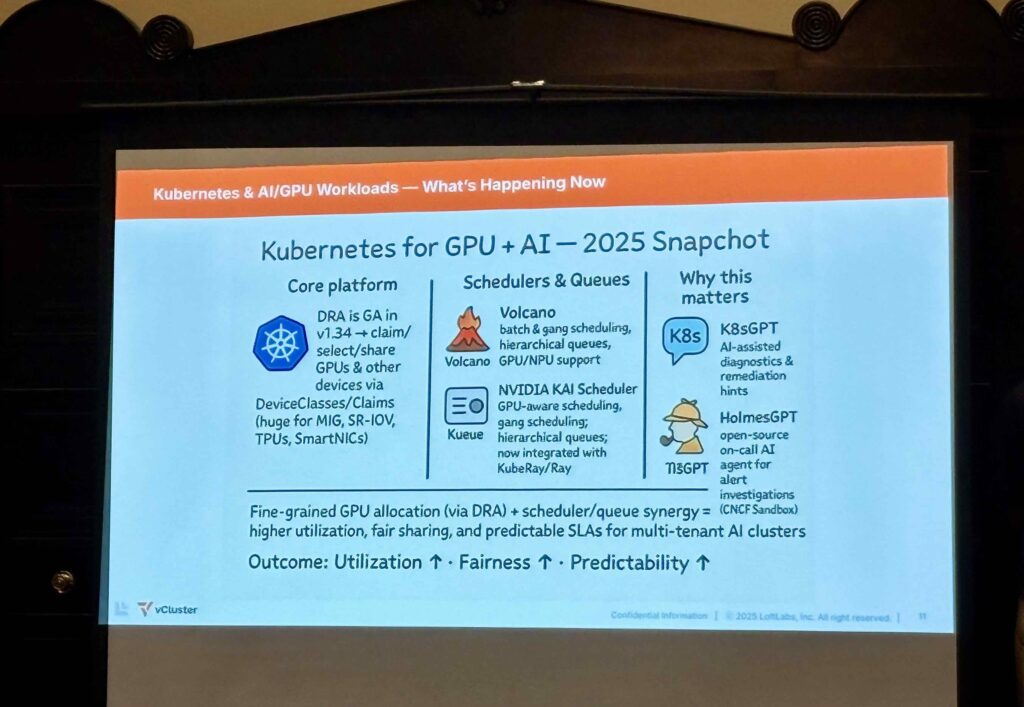

AI + Kubernetes Integration

- Kubernetes shifting from a container orchestrator to a GPU-native operating substrate for AI

- Focus on dynamic GPU partitioning (MIG, vGPU) to reduce cost

- NUMA/topology-aware scheduling for low-latency training and inference

- Standardized device plugins across NVIDIA, AMD (ROCm), and Intel

- Autoscaling based on tokens & latency, not HTTP metrics

- Model, dataset, and embeddings treated as signed supply-chain artifacts

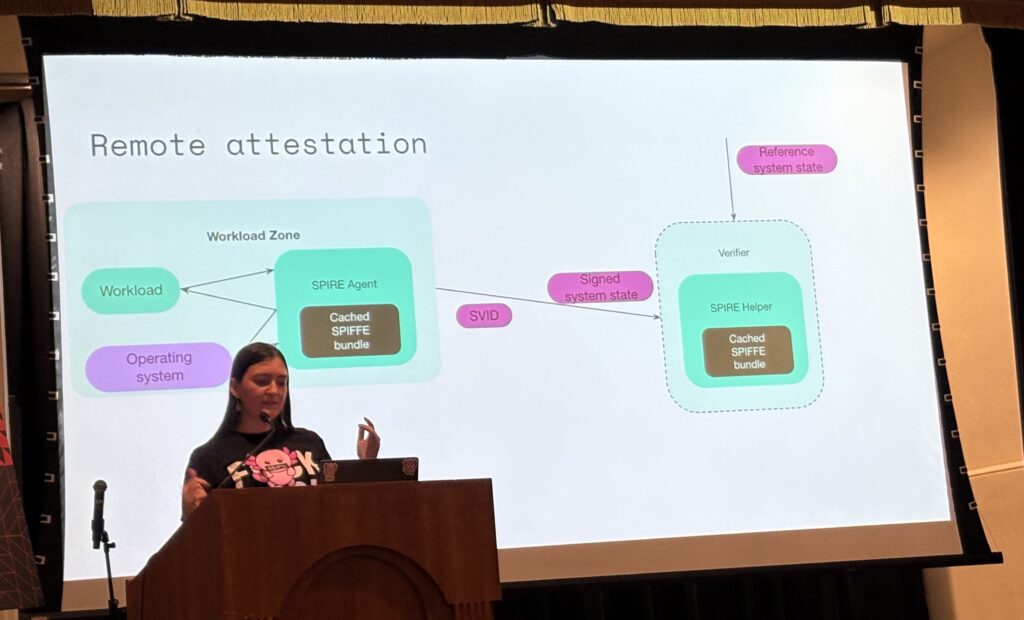

- Identity-backed access to models and GPUs leveraging SPIFFE/SPIRE
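To make autoscaling on tokens and latency a bit more concrete, here is a minimal Python sketch that reuses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current * currentMetric / targetMetric), with a default 10% tolerance) but feeds it tokens per second per replica instead of CPU or request rate. The function names, the p95 latency guard, and the replica cap are illustrative assumptions rather than anything presented in a specific talk; in a real cluster the token metric would typically reach the HPA through a custom or external metrics adapter.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, tolerance: float = 0.1) -> int:
    """HPA-style proportional scaling: ceil(current_replicas * current_value / target_value)."""
    ratio = current_value / target_value
    # Mirror the HPA's default tolerance: ignore small deviations around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return max(1, math.ceil(current_replicas * ratio))


def replicas_for_inference(current_replicas: int,
                           tokens_per_sec_per_replica: float,
                           target_tokens_per_sec: float,
                           p95_latency_ms: float,
                           latency_slo_ms: float,
                           max_replicas: int = 16) -> int:
    """Scale on token throughput, using p95 latency only as a guard (hypothetical policy)."""
    proposal = desired_replicas(current_replicas, tokens_per_sec_per_replica,
                                target_tokens_per_sec)
    # If the latency SLO is breached, never scale down and add at least one replica.
    if p95_latency_ms > latency_slo_ms:
        proposal = max(proposal, current_replicas + 1)
    # Cap the result so a traffic spike cannot exhaust the GPU pool.
    return min(max_replicas, proposal)
```

For example, four replicas each serving 1,500 tokens/s against a 1,000 tokens/s target would be scaled to six. Latency is used as a guard rather than as a second proportional metric because it does not shrink linearly as replicas are added, so the sketch only lets it block scale-down and force at least one extra replica.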

Platform Engineering for Multi-Tenant AI

- Internal platforms must enforce GPU quotas, tenancy, and access control

- Integration of model registries with RBAC + SPIFFE/SPIRE identities

- Dataset lineage + provenance manifests as mandatory artifacts

- Policy-as-code for inference filtering (prompts, outputs)

- SLSA-based model pipelines (dataset → training → signed model → inference)

- Golden paths now include GPU profiles, dataset hashing, autoscaling hints

- Tenant isolation + workload identity using SPIFFE/SPIRE across pipelines
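Dataset hashing and provenance manifests can start as simply as recording a SHA-256 digest for every file plus a single digest over the whole set. The Python sketch below assumes the dataset is a local directory; the manifest layout (`files` and `dataset_digest` keys) and the function names are hypothetical, and this is not the SLSA or in-toto provenance format, which would wrap digests like these in a signed attestation.

```python
import hashlib
import json
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large dataset shards never sit fully in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def dataset_manifest(root: str) -> dict:
    """Build a hypothetical manifest: per-file digests plus one digest for the dataset."""
    root_path = Path(root)
    files = {
        p.relative_to(root_path).as_posix(): sha256_file(p)
        for p in sorted(root_path.rglob("*"))
        if p.is_file()
    }
    # Hash the canonical JSON of the file map: adding, removing, renaming,
    # or modifying any file changes the dataset-level digest.
    canonical = json.dumps(files, sort_keys=True, separators=(",", ":")).encode()
    return {"files": files, "dataset_digest": hashlib.sha256(canonical).hexdigest()}
```

Re-running this in CI and comparing `dataset_digest` with the recorded value turns an unexpected change to any file into a failed check before training starts.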

Zero Trust for AI Workloads

- Zero trust applied to models, datasets, and GPU hardware

- SPIFFE/SPIRE used for identity-bound GPU access + model attestation

- Dataset poisoning considered a CI/CD security risk

- Prompt abuse across shared tenants treated as a data leakage vector

- GPU side-channel attacks via shared memory and plugins

- Model exfiltration prevention using signed registries + identity controls
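Identity-bound access to models can be illustrated with a small authorization check on SPIFFE IDs, which take the form `spiffe://<trust-domain>/<path>`. This Python sketch assumes the caller's SPIFFE ID has already been extracted from a verified X.509-SVID during mTLS (for example, a SPIRE-issued certificate); it does not perform that verification itself. The trust domain `example.org`, the model name, the policy table, and the function names are all hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical trust domain and policy: which workload identities may load which model.
TRUSTED_DOMAIN = "example.org"
MODEL_ACCESS_POLICY: dict[str, set[str]] = {
    "fraud-detector-v3": {"spiffe://example.org/ns/inference/sa/fraud-api"},
}


def parse_spiffe_id(spiffe_id: str) -> tuple[str, str]:
    """Split a SPIFFE ID into (trust_domain, path), rejecting obviously invalid forms.

    This is a partial check: SPIFFE IDs forbid ports, userinfo, query strings and
    fragments, but the full character rules of the specification are not enforced here.
    """
    parts = urlsplit(spiffe_id)
    if parts.scheme != "spiffe" or not parts.netloc:
        raise ValueError(f"not a SPIFFE ID: {spiffe_id!r}")
    if "@" in parts.netloc or ":" in parts.netloc or parts.query or parts.fragment:
        raise ValueError(f"SPIFFE IDs may not carry userinfo, port, query or fragment: {spiffe_id!r}")
    return parts.netloc, parts.path


def authorize_model_access(spiffe_id: str, model: str) -> bool:
    """Deny by default: allow only IDs from the trusted domain that are listed for this model."""
    trust_domain, _path = parse_spiffe_id(spiffe_id)
    if trust_domain != TRUSTED_DOMAIN:
        return False
    return spiffe_id in MODEL_ACCESS_POLICY.get(model, set())
```

The deny-by-default lookup means an unknown model or an unlisted workload is refused without any extra rule, which matches the zero-trust framing of treating models as protected resources rather than open files.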

eBPF for AI Observability & Security

- Collection of GPU telemetry with near-zero overhead

- Detection of anomalies in token-level latency and inference cost

- Monitoring PCIe/NVLink/GPU bandwidth for distributed training

- Real-time introspection into vector-heavy pipelines

- eBPF + SPIFFE/SPIRE integration emerging for identity-aware telemetry

Core Takeaway

- Kubernetes is evolving into a GPU-native, AI-governed platform

- AI demands reshaping scheduling, platform engineering, and security models

- SPIFFE/SPIRE is becoming the identity backbone of AI infrastructure

- The future of Kubernetes is AI-native by design

Must-Watch Sessions

- Building the Next Gen GitOps-based Platform With ConfigHub – Erick Bourgeois & Alexis Richardson

- AI-Assisted GitOps With Flux MCP Server – Stefan Prodan, ControlPlane

- Git Push, Sit Back: Level up AKS Deployments With Flux V2 – Dipti Pai, Microsoft

- Stairway To GitOps (feat. Production at Morgan Stanley) – Tiffany Wang & Simon Bourassa

- Flux – The GitLess GitOps Edition – Stefan Prodan, ControlPlane & Dipti Pai, Microsoft

- Managing a Million Infra Resources at Spotify: Designing the Platform to manage change at scale – Oliver Soell & Fredrik Sommar

- On the Origin of Platforms: Evolution of a Capital One Enterprise Platform – Bradley Whitfield & Jacob Walden

- Real-World Strategies for Cutting Kubernetes Costs: Why One Size Doesn’t Fit All – Dolis Sharma

- Supercharging Backstage Scaffolder for Workflows – Jonathan Chan & Francis Hackenberger

- The Journey of Deploying Backstage in a Large Organization – Mathieu Girard & Teddy Poingt, Beneva

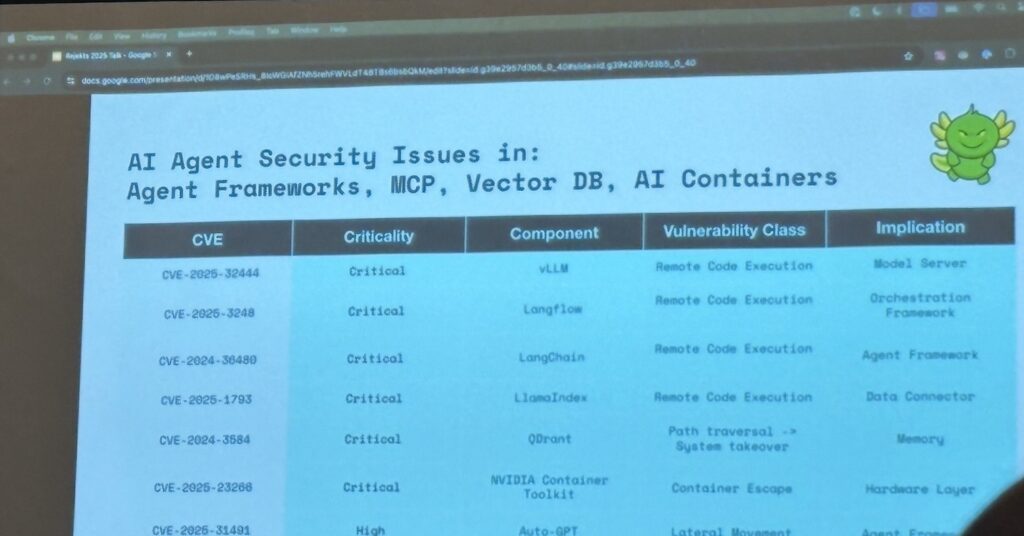

- The Good, the Bad, and the Ugly: Hacking 3 Cloud Native AI Service with 1 vulnerability – Hillai Ben-Sasson & Nir Ohfeld

- Shift Left in Action: Automating CIS Compliance With Kyverno and CNCF Power Tools – Yugandhar Suthari

- Building PCI Compliant Kubernetes Platforms – Demystified, fun and possible – Julien Semaan & Tarun Chinmai Sekar

- OSCAL in Action: Real World Examples of Automating Policy & Compliance – Jennifer Power & Hannah Braswell

- Your Kubernetes Playbook at Your Fingertips: Advanced Troubleshooting with mcp, rag, and k8sgpt – David vonThenen & Yash Sharma

- SAFE-MCP: A Security Framework for AI+MCP (Model Context Protocol) – Frederick Kautz, TestifySec

Looking forward to seeing everyone at future KubeCon events. The next stops are Amsterdam (Europe 2026), Mumbai (India 2026), and Yokohama (Japan 2026)!

Maxime.