After speaking at Cloud Native Rejekts Salt Lake City 2024 last year with Mathieu on “Platform Engineering Loves Security: Shift Down to Your Platform, not Left to Your Developers!”, I returned to Cloud Native Rejekts North America 2025 in Atlanta, this time as an attendee. I was eager to reconnect with the community, discover new research, and exchange ideas on the evolution of Kubernetes security, AI integration, and platform engineering.

Cloud Native Rejekts has always been a special event for me. It’s intimate, technically rich, and community-driven, the perfect pre-KubeCon gathering where bold ideas and unfiltered discussions shape the future of our ecosystem.

Atlanta’s edition had an incredible mix of platform engineers, SREs, developers, and security practitioners from across North America and beyond. What I love most about Rejekts is the raw energy: talks are deeply technical, hallway conversations turn into architecture sessions, and everyone genuinely wants to share and learn.

The venue setup encouraged collaboration, and the diversity of topics, from runtime isolation to AI-driven observability, reflected just how fast our space is evolving.

My Top 5 Highlights from Cloud Native Rejekts 2025

1. Catch Me If You Can: A Kubernetes Escape Story

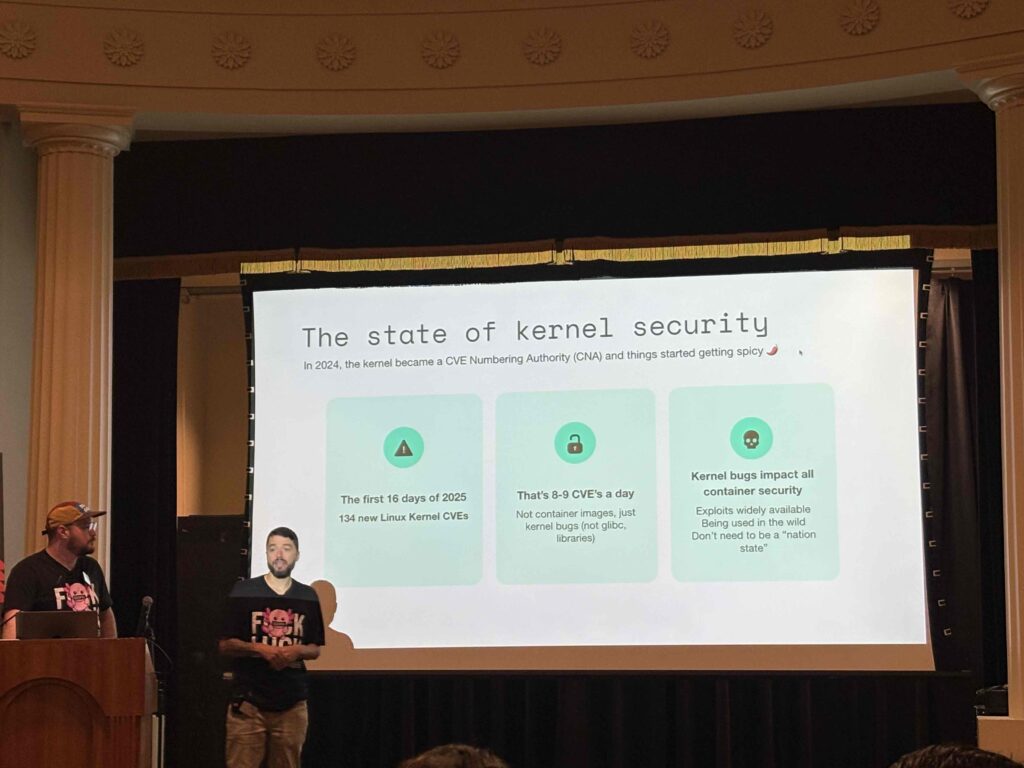

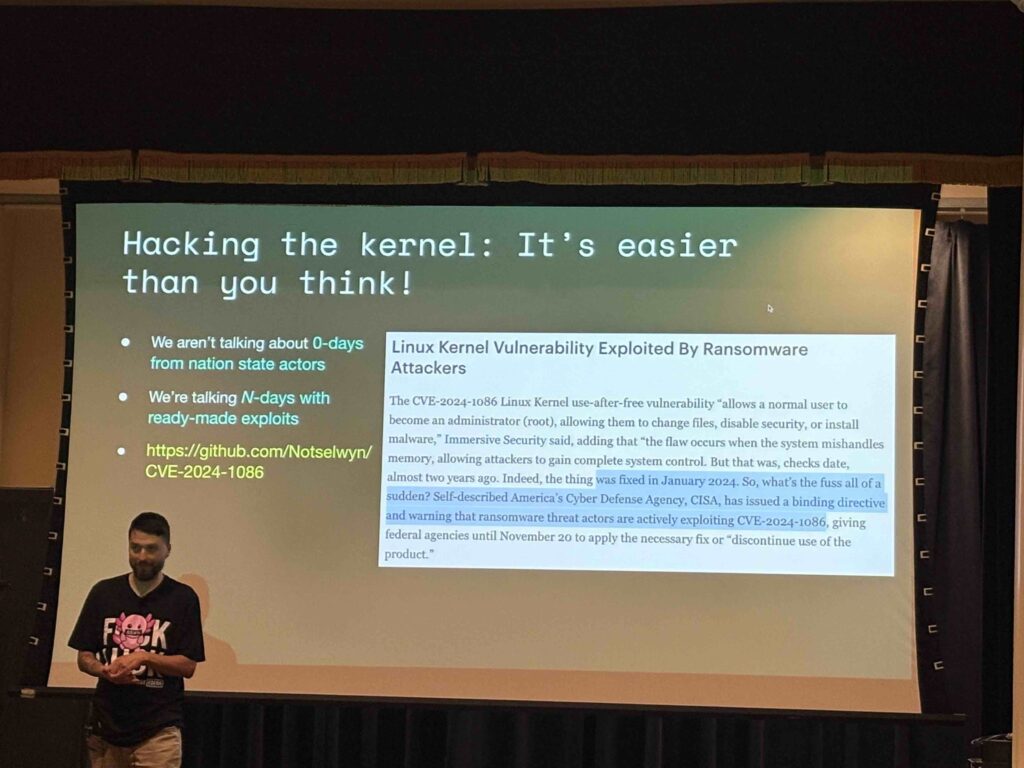

A live, show-stopping demo by Jed Salazar and James Petersen revealed the anatomy of a real-world container escape, from the initial breakout to lateral movement across a Kubernetes cluster. The session unpacked how weak isolation, misconfigured permissions, and monitoring blind spots open the door to stealthy takeovers, and how defenses such as user namespaces in Kubernetes 1.33, capability hardening, and runtime detection close it.

Through a step-by-step attack reconstruction, the speakers connected kernel-level exploits to cluster-wide compromise, then flipped the lens to show how to build multi-tenant isolation, detect breakout signals early, and contain the blast radius.
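To make the defensive side of this a bit more concrete, here is a minimal sketch of a pre-deployment check, assuming pod specs are available as plain Python dicts shaped like the Kubernetes Pod API (for example, parsed from YAML). The `isolation_findings` helper and the sample pods are hypothetical illustrations, not code from the talk; the fields it inspects (`hostUsers`, `privileged`, `allowPrivilegeEscalation`, `capabilities`) are standard Pod fields.

```python
from typing import Any


def isolation_findings(pod: dict[str, Any]) -> list[str]:
    """Hypothetical check: list isolation gaps in a Pod manifest (as a dict)."""
    findings: list[str] = []
    spec = pod.get("spec", {})

    # hostUsers: false gives the pod its own user namespace, so root in the
    # container is not root on the node. Needs kernel and runtime support.
    if spec.get("hostUsers", True) is not False:
        findings.append("pod shares the host user namespace (set hostUsers: false)")

    for c in spec.get("initContainers", []) + spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        sc = c.get("securityContext", {})
        caps = sc.get("capabilities", {})
        if sc.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # When unset, privilege escalation is allowed, so require an explicit False.
        if sc.get("allowPrivilegeEscalation", True) is not False:
            findings.append(f"{name}: allowPrivilegeEscalation is not false")
        if "ALL" not in caps.get("drop", []):
            findings.append(f"{name}: does not drop ALL capabilities")
        if caps.get("add"):
            findings.append(f"{name}: adds capabilities {caps['add']}")
    return findings


hardened = {
    "spec": {
        "hostUsers": False,
        "containers": [{
            "name": "app",
            "image": "example.com/app:1.0",  # hypothetical image
            "securityContext": {
                "allowPrivilegeEscalation": False,
                "capabilities": {"drop": ["ALL"]},
            },
        }],
    }
}
risky = {"spec": {"containers": [{"name": "debug", "securityContext": {"privileged": True}}]}}

print(isolation_findings(hardened))  # []
for finding in isolation_findings(risky):
    print(finding)
```

A static check like this only covers the prevention layer; the runtime detection the speakers demonstrated is still needed for anything that slips through.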

A must-watch for anyone serious about runtime hardening and defense-in-depth in Kubernetes.

2. Beyond the Default Scheduler: Navigating GPU Multitenancy in the AI Era

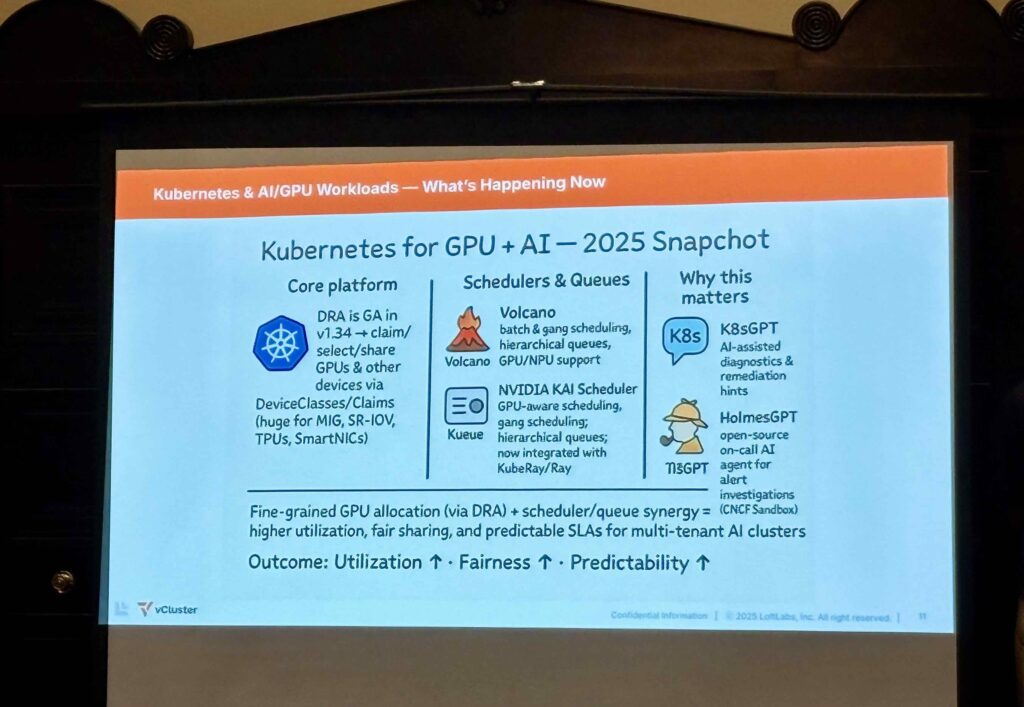

Shivay Lamba, Hrittik Roy, and Saiyam Pathak explored one of the toughest challenges in AI infrastructure: secure GPU sharing.

They broke down how time-slicing improves utilization but weakens isolation, and why NVIDIA MIG’s hardware partitioning (compute cores, memory, L2 cache) is a game changer.
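For readers new to this trade-off, here is a sketch of what the two sharing models look like from a workload’s point of view. It assumes the NVIDIA Kubernetes device plugin is installed and MIG is exposed with its “mixed” strategy; the `gpu_pod` helper and the image name are hypothetical, and the available MIG profile names (here `1g.5gb`) depend on the GPU model.

```python
import json

# Time-slicing: the NVIDIA device plugin advertises each physical GPU as
# N schedulable replicas. Pods share compute and memory with no hardware
# isolation between them.
time_slicing_config = {
    "version": "v1",
    "sharing": {
        "timeSlicing": {
            "resources": [{"name": "nvidia.com/gpu", "replicas": 4}],
        }
    },
}


def gpu_pod(name: str, resource: str, image: str = "example.com/trainer:latest") -> dict:
    """Hypothetical helper: a minimal Pod requesting one unit of a GPU resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "worker",
                "image": image,
                "resources": {"limits": {resource: 1}},
            }],
        },
    }


# One of the 4 time-sliced replicas of a shared GPU.
shared = gpu_pod("tenant-a-shared", "nvidia.com/gpu")
# A dedicated MIG slice with its own compute, memory, and L2 cache.
isolated = gpu_pod("tenant-b-isolated", "nvidia.com/mig-1g.5gb")

print(json.dumps(isolated["spec"]["containers"][0]["resources"]))
# {"limits": {"nvidia.com/mig-1g.5gb": 1}}
```

Both requests look alike to the scheduler, which is exactly why the isolation difference is easy to miss: only the MIG request maps to a hardware-partitioned slice.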

By leveraging schedulers like KAI, Volcano, and Kueue, they showed how to build secure, fair, and efficient multi-tenant GPU clusters that can power the next generation of AI workloads.
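Fair sharing usually starts with per-tenant quotas. The toy model below is a hypothetical sketch, not how KAI, Volcano, or Kueue are implemented: each tenant gets a nominal GPU quota plus an optional borrowing limit for idle capacity (loosely echoing Kueue’s nominal quota and borrowing concepts), and jobs that don’t fit are queued. Real schedulers also reclaim borrowed GPUs through preemption and handle gang scheduling and topology, all of which this sketch omits.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Tenant:
    name: str
    quota: int             # nominal GPUs reserved for this tenant
    borrow_limit: int = 0  # extra GPUs it may use while others are idle
    used: int = 0


@dataclass
class GpuPool:
    capacity: int
    tenants: dict[str, Tenant] = field(default_factory=dict)
    pending: deque = field(default_factory=deque)

    def free(self) -> int:
        return self.capacity - sum(t.used for t in self.tenants.values())

    def _fits(self, t: Tenant, gpus: int) -> bool:
        return gpus <= self.free() and t.used + gpus <= t.quota + t.borrow_limit

    def submit(self, tenant: str, gpus: int) -> bool:
        """Admit the job now if it fits, otherwise queue it."""
        t = self.tenants[tenant]
        if self._fits(t, gpus):
            t.used += gpus
            return True
        self.pending.append((tenant, gpus))
        return False

    def release(self, tenant: str, gpus: int) -> list[tuple[str, int]]:
        """Free GPUs, then scan the queue in FIFO order and admit jobs that now fit."""
        self.tenants[tenant].used -= gpus
        admitted, still_pending = [], deque()
        while self.pending:
            name, req = self.pending.popleft()
            t = self.tenants[name]
            if self._fits(t, req):
                t.used += req
                admitted.append((name, req))
            else:
                still_pending.append((name, req))
        self.pending = still_pending
        return admitted


pool = GpuPool(capacity=8, tenants={
    "team-a": Tenant("team-a", quota=4, borrow_limit=2),
    "team-b": Tenant("team-b", quota=4),
})
print(pool.submit("team-a", 6))   # True: 4 nominal + 2 borrowed while team-b is idle
print(pool.submit("team-b", 4))   # False: only 2 GPUs free, so the job is queued
print(pool.release("team-a", 2))  # [('team-b', 4)]
```

The example also shows the weakness of borrowing without preemption: team-b waits for its own nominal quota until team-a gives GPUs back.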

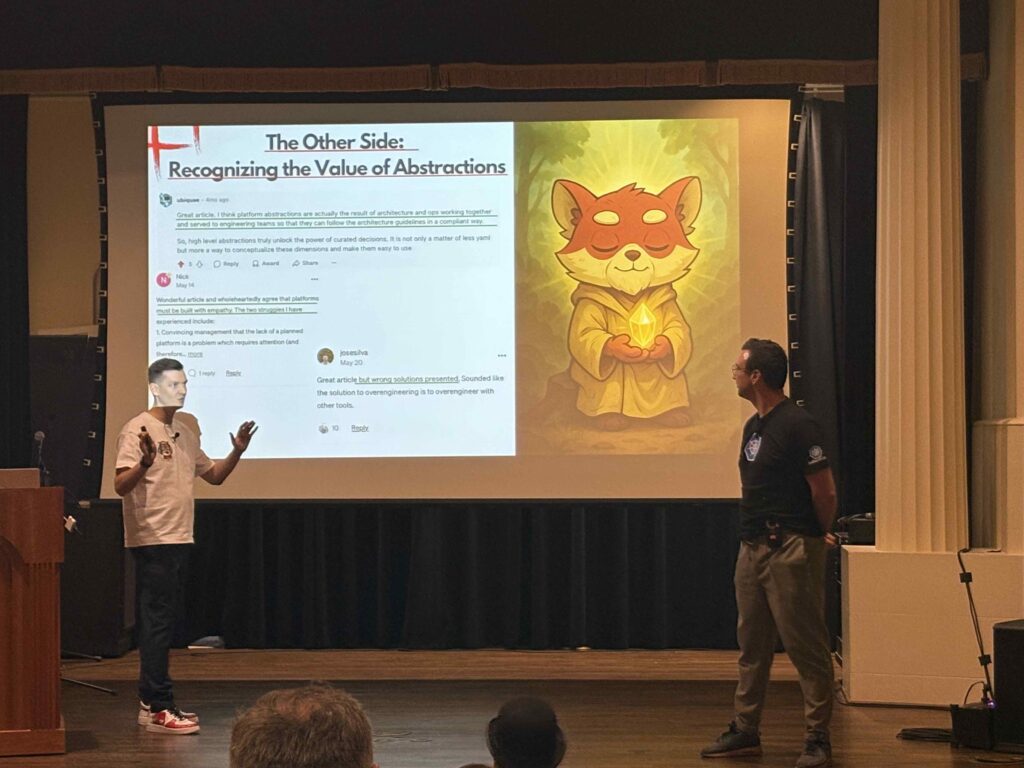

3. Make Your Developer’s Pains Go Away, with the Right Level of Abstraction for Your Platform

Mathieu Benoit and Artem Lajko tackled a reality every engineer knows too well: developers don’t spend their day coding; they spend it battling TicketOps, infrastructure blockers, and security gates.

Their talk presented a battle-tested approach to building Internal Developer Platforms (IDPs) with empathy, powered by Score and Kro.

The key takeaway: successful platforms don’t hide Kubernetes; they abstract it at the right level.

By combining GitOps workflows with automation, they demonstrated how developers can deploy secure, production-grade workloads effortlessly, focusing on their apps while the platform handles the hard parts behind the scenes. It wasn’t about YAMLs or GitOps; it was about developer joy.
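The “right level of abstraction” idea is easier to see side by side. Below, a minimal Score workload (the developer’s contract) is written as a Python dict, and a hypothetical `to_deployment` function plays the platform’s role by emitting a Deployment with security defaults the developer never has to write. This is an illustration under those assumptions, not the output of actual Score implementations such as score-k8s; the service port and the `postgres` resource would be handled by other platform provisioners and are ignored here.

```python
import copy

# What the developer writes and owns: a minimal Score workload.
score_workload = {
    "apiVersion": "score.dev/v1b1",
    "metadata": {"name": "orders"},
    "containers": {"api": {"image": "example.com/orders-api:1.4.2"}},  # hypothetical image
    "service": {"ports": {"http": {"port": 8080, "targetPort": 8080}}},
    "resources": {"db": {"type": "postgres"}},
}

# What the platform team owns: secure defaults injected into every container.
PLATFORM_SECURITY_CONTEXT = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "capabilities": {"drop": ["ALL"]},
    "seccompProfile": {"type": "RuntimeDefault"},
}


def to_deployment(workload: dict, replicas: int = 2) -> dict:
    """Hypothetical platform step: render a Score workload as a Deployment.

    Only containers are translated; ports and resources such as the
    postgres database would be handled by other provisioners.
    """
    name = workload["metadata"]["name"]
    labels = {"app.kubernetes.io/name": name}
    containers = [
        {
            "name": cname,
            "image": spec["image"],
            # Deep copy so each container gets an independent security context.
            "securityContext": copy.deepcopy(PLATFORM_SECURITY_CONTEXT),
        }
        for cname, spec in workload["containers"].items()
    ]
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": containers},
            },
        },
    }


deployment = to_deployment(score_workload)
print(deployment["spec"]["template"]["spec"]["containers"][0]["securityContext"])
```

The developer-facing file stays small and portable, while hardening lives in one place the platform team can evolve without touching every repository.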

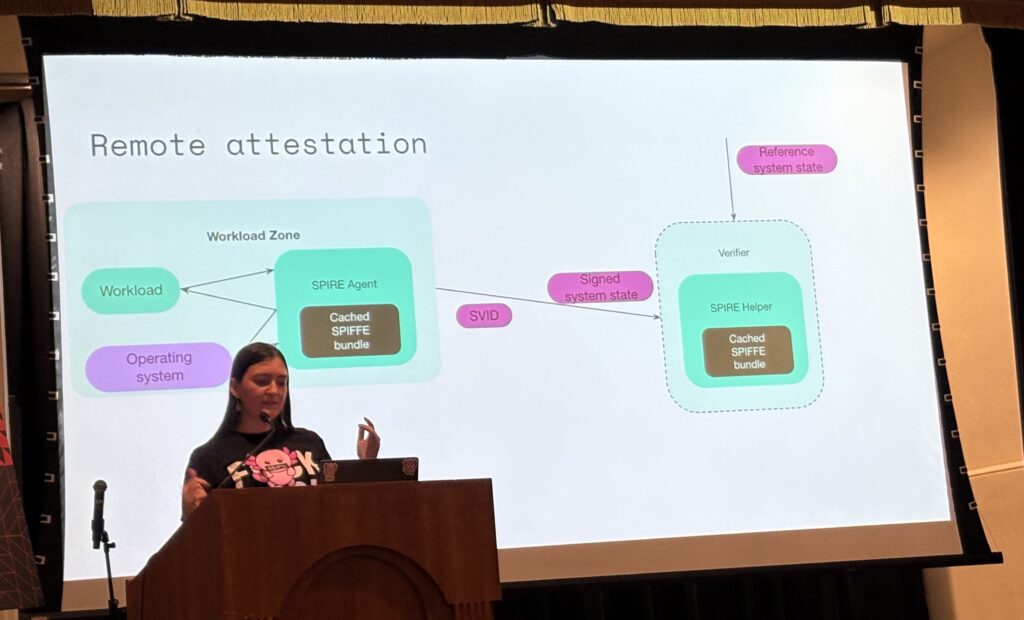

4. In-SPIRE-ing Identity: Using SPIRE for Verifiable Container Isolation

Marina Moore delivered a brilliant session on cryptographic attestation for workloads using SPIFFE/SPIRE. Building on Edera’s architecture, she showed how teams can prove that workloads run in isolated zones, with end-to-end encryption and non-falsifiable build provenance: essentially, identity as a security perimeter.

Her insights into deployment challenges and configuration trade-offs offered a roadmap for teams moving toward verifiable workload trust in cloud-native systems.
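At the heart of SPIFFE is a URI-shaped identity, `spiffe://<trust-domain>/<path>`, which SPIRE issues to attested workloads inside their SVIDs. The sketch below shows how a service might validate a peer’s SPIFFE ID and authorize it by trust domain and path prefix. It simplifies the SPIFFE ID rules, the trust domains and paths are hypothetical, and it does not model Edera’s zone attestation; in production you would obtain and verify SVIDs through the Workload API with an official library such as go-spiffe rather than parse strings by hand.

```python
import re

# Simplified character rules from the SPIFFE ID specification.
TRUST_DOMAIN_RE = re.compile(r"[a-z0-9._-]+")
PATH_SEGMENT_RE = re.compile(r"[A-Za-z0-9._-]+")
MAX_ID_LENGTH = 2048


def parse_spiffe_id(value: str) -> tuple[str, str]:
    """Split a SPIFFE ID into (trust_domain, path); raise ValueError if malformed."""
    prefix = "spiffe://"
    if not value.startswith(prefix) or len(value) > MAX_ID_LENGTH:
        raise ValueError(f"not a SPIFFE ID: {value!r}")
    trust_domain, sep, path = value[len(prefix):].partition("/")
    if not TRUST_DOMAIN_RE.fullmatch(trust_domain):
        # Rejects empty domains, uppercase letters, ports, and userinfo.
        raise ValueError(f"invalid trust domain: {trust_domain!r}")
    if not sep:
        return trust_domain, ""
    for segment in path.split("/"):
        # Rejects empty segments (e.g. a trailing slash), '.', '..', and query strings.
        if not PATH_SEGMENT_RE.fullmatch(segment) or segment in (".", ".."):
            raise ValueError(f"invalid path segment: {segment!r}")
    return trust_domain, "/" + path


def is_authorized(peer_id: str, trust_domain: str, allowed_prefixes: tuple[str, ...]) -> bool:
    """Allow peers from our trust domain whose path falls under an allowed prefix."""
    try:
        peer_domain, peer_path = parse_spiffe_id(peer_id)
    except ValueError:
        return False
    if peer_domain != trust_domain:
        return False
    # Match whole path segments so /ns/payments does not match /ns/payments-evil.
    return any(
        peer_path == prefix or peer_path.startswith(prefix.rstrip("/") + "/")
        for prefix in allowed_prefixes
    )


allowed = ("/ns/payments/sa",)  # hypothetical namespace/service-account layout
print(is_authorized("spiffe://prod.example.org/ns/payments/sa/api", "prod.example.org", allowed))       # True
print(is_authorized("spiffe://prod.example.org/ns/payments-evil/sa/api", "prod.example.org", allowed))  # False
print(is_authorized("spiffe://dev.example.org/ns/payments/sa/api", "prod.example.org", allowed))        # False
```

Authorizing on a verified identity rather than on an IP address or network location is what makes identity usable as a perimeter.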


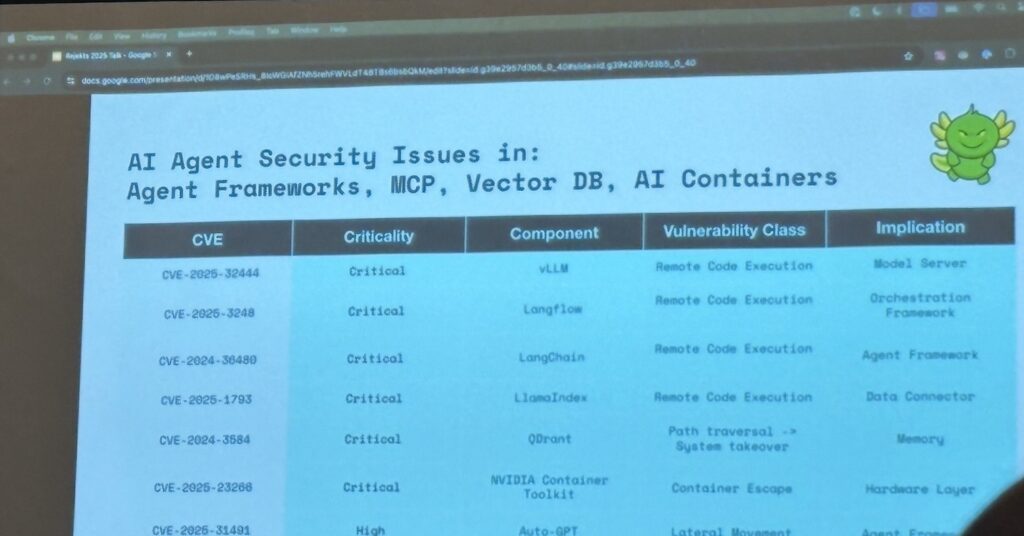

5. The Paranoid’s Guide to Deploying Skynet’s Interns

This talk was a reality check for anyone deploying AI agents in production. Autonomous agents are powerful, but they’re being plugged into legacy or unsecured architectures: a recipe for chaos.

Dan Fernandez broke down the anatomy of AI agent ecosystems (agents, MCP servers, tools, and memory) before exposing the major security pitfalls:

- Tangled Web of Trust: Agents interact with tools and data sources of mixed trust levels, risking internal system compromise.

- Persistent Threats: Because agents “remember,” attacks can persist, evolve, and resurface over time.

- Amplified Supply Chain Risks: Every autonomous action turns dependencies into potential attack vectors.

- Compounding Complexity: Multi-agent communication and centralized MCP servers obscure visibility and weaken control.

The key takeaway: treat AI agents as untrusted, dynamic supply chains. Apply strict segmentation, isolation, and defense-in-depth to every component, from MCP servers to memory stores. Paranoia isn’t overkill here; it’s essential for survival in the era of autonomous AI.
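One way to picture “segmentation and isolation for every component” is a policy gateway sitting between an agent and its tools and memory. Everything below (`Trust`, `ToolGateway`, the taint flag) is a hypothetical design sketch, not part of MCP or any agent SDK and not the speaker’s implementation. Tools are labeled with trust levels, agents get allowlists and a privilege ceiling, content influenced by untrusted sources cannot trigger privileged tools, and tainted results are quarantined rather than written to long-term memory. The caller is assumed to track whether its context is tainted.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class Trust(IntEnum):
    UNTRUSTED = 0   # e.g. fetched web pages, inbound email
    INTERNAL = 1    # internal, read-only data sources
    PRIVILEGED = 2  # tools that change production state


@dataclass(frozen=True)
class ToolResult:
    tool: str
    content: str
    tainted: bool  # True if untrusted input contributed to this result


@dataclass(frozen=True)
class AgentPolicy:
    name: str
    allowed_tools: frozenset[str]
    max_trust: Trust  # most privileged tool level this agent may invoke


class ToolGateway:
    """Hypothetical choke point between agents, their tools, and shared memory."""

    def __init__(self, tools: dict[str, Trust]):
        self.tools = tools
        self.memory: list[ToolResult] = []

    def call(self, policy: AgentPolicy, tool: str, tainted_context: bool,
             run: Callable[[], str]) -> ToolResult:
        level = self.tools.get(tool)
        if level is None or tool not in policy.allowed_tools:
            raise PermissionError(f"{policy.name}: tool {tool!r} is not allowed")
        if level > policy.max_trust:
            raise PermissionError(f"{policy.name}: {tool!r} exceeds its trust ceiling")
        # Content influenced by untrusted input must not drive privileged actions.
        if tainted_context and level == Trust.PRIVILEGED:
            raise PermissionError(f"{policy.name}: tainted context cannot call {tool!r}")
        return ToolResult(tool, run(), tainted_context or level == Trust.UNTRUSTED)

    def remember(self, result: ToolResult) -> bool:
        # Quarantine tainted results so a poisoned input cannot persist in memory.
        if result.tainted:
            return False
        self.memory.append(result)
        return True


gateway = ToolGateway({
    "web_fetch": Trust.UNTRUSTED,
    "read_docs": Trust.INTERNAL,
    "deploy": Trust.PRIVILEGED,
})
researcher = AgentPolicy("researcher", frozenset({"web_fetch", "read_docs"}), Trust.INTERNAL)

page = gateway.call(researcher, "web_fetch", tainted_context=False, run=lambda: "<html>...</html>")
print(page.tainted)            # True
print(gateway.remember(page))  # False: untrusted content is not persisted
```

Centralizing these checks in one gateway also addresses the visibility problem: every tool call and memory write passes through a single point that can be logged and audited.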

Networking and Shared Purpose

Beyond the sessions, hallway conversations were pure gold.

I had deep discussions about:

- Integrating Kubernetes security controls within Platform Engineering and Internal Developer Platforms (IDPs) to deliver secure-by-default services while maintaining developer velocity.

- Measuring platform security maturity using structured threat models and practical scorecards.

- Embedding AI-driven risk assessment directly into CI/CD pipelines for continuous validation.

Watch the Replays

- Theater Sessions

- Crystal Dining Room Sessions

It’s clear that the Cloud Native Rejekts community thrives on transparency, mentorship, and shared improvement, values that continue to guide my own journey in cloud-native security.

And finally, a heartfelt thank you to all the volunteers who made this event possible. Your passion, generosity, and dedication are what make Cloud Native Rejekts such a unique experience. It’s more than a conference; it’s a community space where creativity meets curiosity, where ideas grow into open-source projects, and where the next wave of cloud-native innovation quietly takes shape.

Maxime.